This is the second post in our “Small Business Series,” in which we interview industry experts on how small businesses can better leverage their strengths. Today we are interviewing Ken Hilburn from Juice Analytics.

Note: As a bonus, Ken has generously shared Juice Analytics’ recent survey with us.

Romy Misra (Pear Analytics): First, Ken, thanks so much for taking the time to do this. The importance of surveying your customers has been talked about repeatedly. Why do you think it is important to survey your online customers and visitors?

Ken Hilburn: I have to start off by saying that Juice Analytics has an awesome community and we love talking, listening and working with them. But even with such a strong group, it’s critical to talk less and listen more.

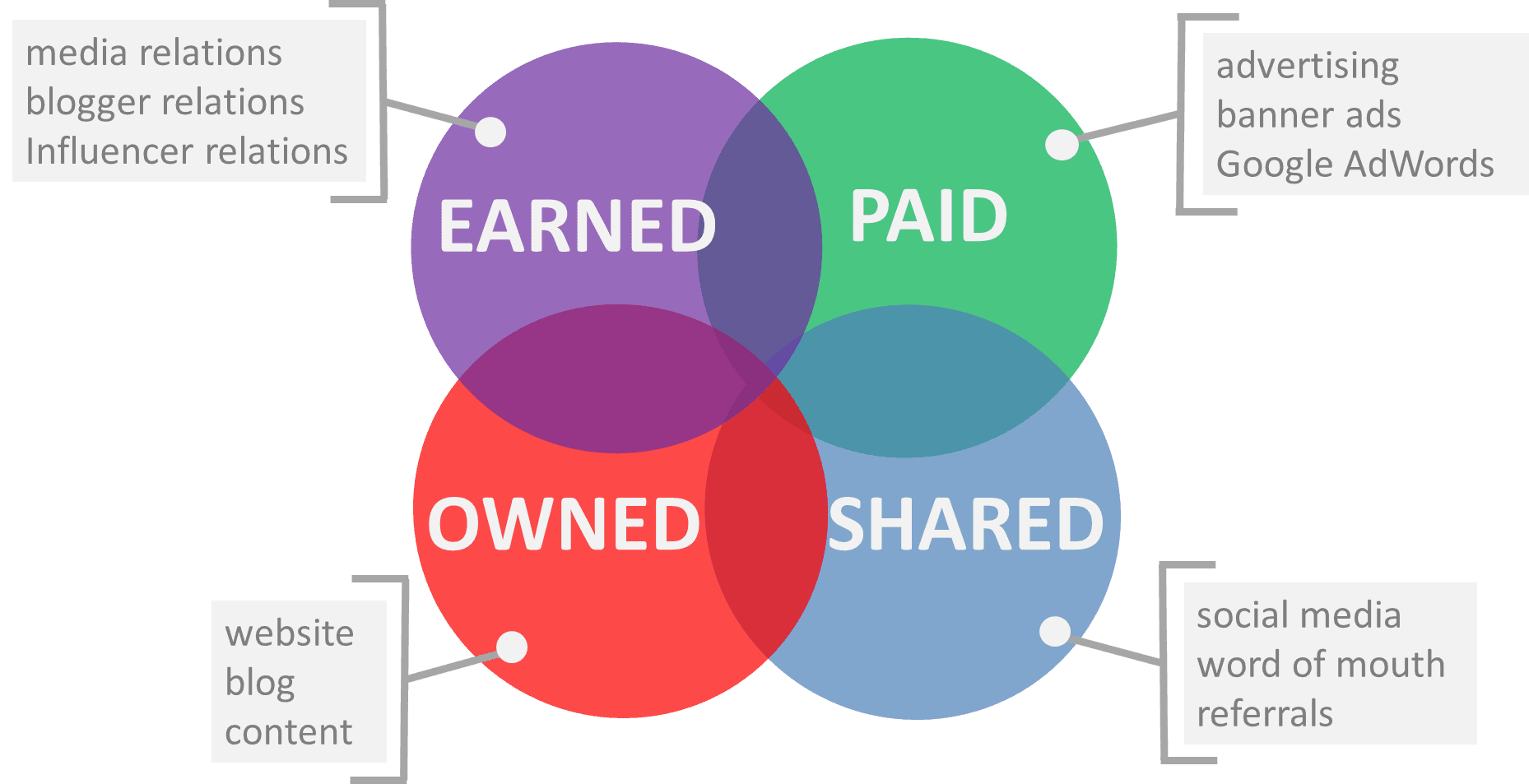

It used to be, for the most part, that customers were at the mercy of vendors when it came to communication – it was pretty much a one-way street. That’s changing now. With the pervasive use of social media sites, there has been a tremendous shift toward “power to the people.” At this point it’s clearly in a company’s best interest to stay in touch with its customers and know how well their needs are being met. We get to choose: we can do that proactively, or we can wait to see it on Twitter’s “Popular Topics” list and hope it’s positive.

Romy Misra (Pear Analytics): The usual ways of inviting customers to take a survey can be intrusive, generally in the form of annoying pop-ups. In your experience, what are the best ways to ask your customers to participate in a survey?

Ken Hilburn: At Juice Analytics, we’ve always tried super hard to intrude as little as possible on the user’s experience. It’s baked into the way we interact with our community and clients on every level, from mailing lists to surveys to our applications. We believe that if you create a valuable product (be it information, services, or software), people will proactively engage – Seth Godin has been great at reminding us that you shouldn’t have to yell at someone to get their attention.

For our recent survey on whether we were helping our fans be more effective in their companies, we decided to use two primary channels for requesting feedback. The first was a link to the survey on our blog, which has a very broad and strong following. The second was an email to those who had opted in to receiving information from Juice. We didn’t think pop-ups on the website were warranted for survey participation. Pop-ups are annoying and should only be used when you need to collect information from an otherwise unreachable audience on a very specific, quick-turnaround topic.

Romy Misra (Pear Analytics): Avinash Kaushik has talked widely about limiting your survey to four important questions using the 4Q model. Are there any other best practices one should follow when building a survey?

Ken Hilburn: We’re big fans of Avinash and what he does. And that’s no different when it comes to his 4Q model. However, our survey objectives are a little different from what the 4Q model was intended to address. For instance, in our most recent case we were more interested in learning about the general information visualization environment in our community’s companies, rather than user experience on the web site. As a result, here are the principles we used when constructing our survey:

* Participants have to have the option to avoid it altogether (see question 2).

* It has to be short enough that someone can complete the whole thing in less than 5 minutes – preferably less than 3… or 1.

* The final results have to be useful not only to Juice but also to the participants. We set this criterion because we were going to offer the results back to the community.

* Above all, it has to be fun. We believe many people see our domain as dry and boring, so we try very hard to make our community engagements friendly and entertaining. We saw this survey as the perfect challenge: turning the “ultimate boring” into something people had fun doing. Based on the feedback (and responses) we received, we achieved that objective.

Romy Misra (Pear Analytics): Can you share with us your ideas of how to keep your customers engaged during a survey?

Ken Hilburn: We’re living in an age where attention spans are getting shorter and shorter. Folks will only do something as long as they want to. We used several concepts to encourage completion:

* We used a personal, friendly tone to try to get our participants comfortable with the survey.

* We tried to get participants to look forward to the next question by transforming typically boring questions into interesting ones. For instance, the very first question was about company size. Instead of asking “Does your company have 1,000-5,000 employees?”, we asked:

  In terms of size, which of the following is your company most like?

  - A one man band

  - The Dirty Dozen

  - The University of Rhode Island

  - Microsoft

* Finally, questions have to be worded so they are easy to read, understand and answer. When people start having to think about their responses, their progress slows and they start to bail on the survey.

Romy Misra (Pear Analytics): Could you share your experience of surveying your readers at Juice Analytics, and what you did to make the survey a success?

Ken Hilburn: Our readers are engaged because they’re interested in innovative ways to look at data, so we promised to display the results using alternative charting styles. To do this, we had to break the #1 survey rule: stay away from text-based answers. About half of the questions in this survey were text-based (as opposed to multiple choice). That required more effort on the back end from us, but we think the payoff was better participation.

As I mentioned earlier, we posted the survey on our blog first and then sent an email invitation to participate. The email had an open rate of over 35%, a 45% click rate and a completion rate above 60%. Overall, we had over 500 respondents. Again, we have an awesome community that really supports us and the work we do. As a result, we treat them with a great deal of respect and try to make our communications feel very personal. People like this.
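The email rates compound from one stage to the next. The sketch below illustrates this under two assumptions the interview does not state. First, the click rate is measured against opens and the completion rate against clicks. Second, the list has a hypothetical 1,000 recipients, which is not Juice’s actual list size. The `funnel` function is a name made up for this example.

```python
# Hypothetical email-funnel sketch. Assumes each rate applies to the
# previous stage: opens out of emails sent, clicks out of opens,
# completions out of clicks. The list size is illustrative only.
def funnel(sent: int, open_rate: float, click_rate: float,
           completion_rate: float) -> dict:
    opened = sent * open_rate            # recipients who opened the email
    clicked = opened * click_rate        # openers who clicked the survey link
    completed = clicked * completion_rate  # clickers who finished the survey
    return {"opened": opened, "clicked": clicked, "completed": completed}


stages = funnel(1000, 0.35, 0.45, 0.60)
for name, count in stages.items():
    print(f"{name}: {count:.1f}")
```

Under those assumptions, about 94 or 95 of every 1,000 recipients would finish the survey. The 500+ respondents also include blog readers, so this arithmetic cannot be used to work backward to the size of Juice’s mailing list.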

Romy Misra (Pear Analytics): There are so many survey tools out there. Which one(s) would you recommend using?

Ken Hilburn: There are a lot. There are even some good ones (ha!). I think selecting a tool has to be based on what you’re trying to accomplish. Here are the criteria we used:

* Had to support a wide variety of question types (like rating, ranking, and conditional sequencing)

* Questions had to be easy to enter into the system (spend your time _creating_ a great survey, not administering it)

* Questions had to be presented to participants in a format that meets our very high standard of aesthetic quality.

* The final results had to be easy to export in their entirety, since we wanted to perform some in-depth analysis and visualization.

* We preferred that it integrate with our email marketing tool.

We’ve tried several different tools for collecting user information. The first, and most flexible, is Google Apps forms. We like it because the results go directly into a Google spreadsheet, which makes them easy to manipulate. We also like SurveyMonkey because it’s super simple and quick to set up. For our most important surveys, we have used Constant Contact’s offering because it lets us target and track our audience more effectively.

Romy Misra (Pear Analytics): What is the ideal number of survey participants for a company to feel confident taking action on the feedback (e.g., making changes to a site or adding features to a product)?

Ken Hilburn: Being the engineer that I am, I’d love to say: “100% of your user base, of course.” But we all know that’s not a reasonable expectation. I’d say it has to be a high enough percentage of your user base that you feel change is justified. However, when it comes to changing the way your customers engage with your site or product, acting solely on a survey should be done cautiously. Once a survey indicates that a change might be warranted, try some A/B testing or more focused user group sessions to confirm your assumptions. Both options are becoming much more affordable these days. In the long run, you’ll see much better results.

---

Ken works with the Juice community and customers to help them better understand how to incorporate information presentation best practices into their world. He’s known for applying his structured approach and deep customer experience to help clients implement targeted information solutions.

Once again, check out the totally awesome survey that Juice Analytics created to engage customers. We’ll definitely be trying some of these tactics out with our customer surveys in the near future.