Have you ever been to a website that takes forever to load? What do you do?

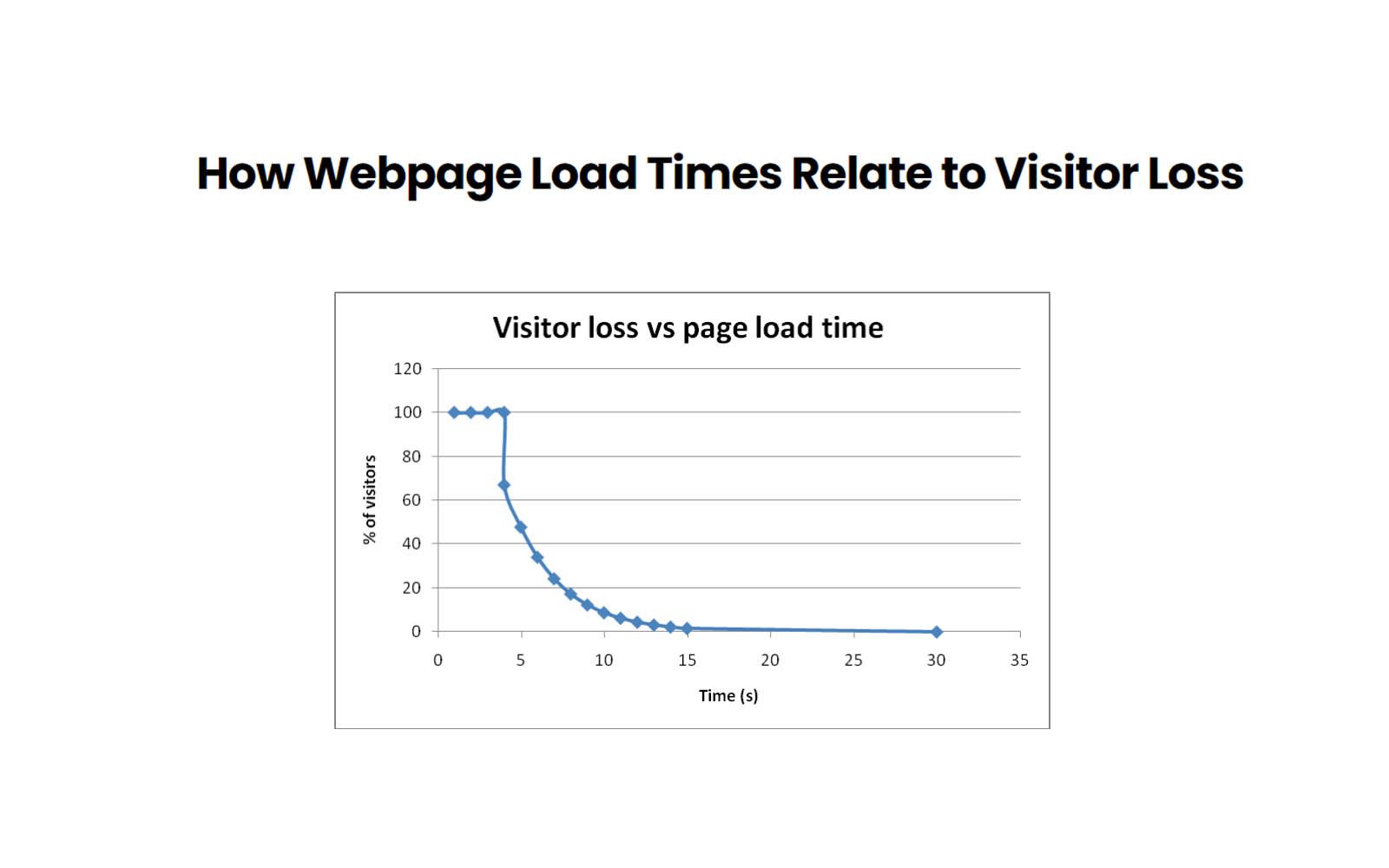

We’ve taken some past research and developed a way to estimate how many visitors you could be losing based on your webpage load time, for load times from 0 to 30 seconds. This was not easy: only a couple of studies have actually been done, and not only are they aging, they have also been controversial and cover only roughly the first 4 seconds of load time. Obviously, many factors determine how long you are willing to wait for a page to load, but with tabbed browsing, faster connection speeds, and more in the mix, maybe that is why a real study has not been done since 2006.

Here are the key takeaways from the research we were able to find:

– Zona Research said in 1999 that you could lose up to 33% of your visitors if your page took more than 8 seconds to load.

– Akamai said in 2006 that you could lose up to 33% of your visitors if your page took more than 4 seconds to load on a broadband connection.

– Tests done at Amazon in 2007 revealed that every 100 ms increase in load time decreased sales by 1%.

– Tests done at Google in 2006 revealed that going from 10 to 30 results per page increased load time by a mere 0.5 seconds, but resulted in a 20% drop in traffic.

Wow. Half a second? Is that even enough time to take a breath? Yet, when browsing, most people will lose patience and leave your website before they even have time to breathe. For e-commerce sites, this matters a lot. If your website is selling a fairly generic item, your site had better load pretty damn fast or you just lost your sale to some other guy. At Christmas, when every parent is looking for this season’s must-have toy, you had better hope your website loads in under 2 seconds. When a husband forgets his anniversary and is quickly looking for a flower delivery place while the boss isn’t looking, your pictures had better not be so big that they take forever to load.

So how long does your webpage take to load? Check out Pingdom.com/Tools, and then come back here to approximate your potential visitor loss.

If you prefer to “geek out” and read our entire white paper, you can download it here. (I will warn you that it does mention words like “mathematical model”, “radioactive first-order decay” and “non-linear regression”.)
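
For the curious, here is a rough sketch of what a first-order decay estimate might look like in Python. To be clear, this is not the fitted model from the white paper: the function name `estimated_visitor_loss` and the constants are made up for illustration, and the decay rate is simply calibrated so that the curve passes through the Akamai figure quoted above (33% of visitors lost at 4 seconds). The real model was fitted with non-linear regression, so its numbers will differ.

```python
import math

# ASSUMPTION: calibrate the curve on a single data point, the Akamai figure
# quoted in this post (up to 33% of visitors lost at 4 seconds).
# These are illustrative values, not the white paper's fitted parameters.
CALIBRATION_SECONDS = 4.0
CALIBRATION_LOSS = 0.33

# First-order decay: remaining(t) = exp(-k * t).
# Solve 1 - exp(-k * 4) = 0.33 for the decay rate k.
DECAY_RATE = -math.log(1.0 - CALIBRATION_LOSS) / CALIBRATION_SECONDS


def estimated_visitor_loss(load_time_seconds: float) -> float:
    """Return the estimated fraction of visitors lost (0.0 to 1.0)."""
    if load_time_seconds < 0:
        raise ValueError("load time cannot be negative")
    remaining = math.exp(-DECAY_RATE * load_time_seconds)
    return 1.0 - remaining


if __name__ == "__main__":
    for seconds in (1, 2, 4, 8, 15, 30):
        loss = estimated_visitor_loss(seconds)
        print(f"{seconds:>2} s load time: about {loss:.0%} of visitors lost")
```

Because this sketch leans on a single data point, treat its output as a ballpark only. Calibrating on the Zona figure instead (33% at 8 seconds) would give a much gentler curve, which is exactly why the studies are hard to reconcile.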